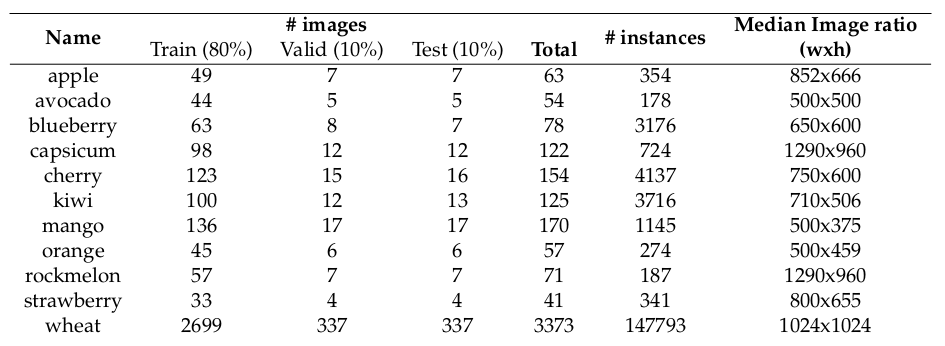

Fruit detection dataset

We also provide a relatively small yet useful set of bounding box annotations for 11 fruits and crops. Seven of them (apple, avocado, capsicum, mango, orange, rockmelon, and strawberry) are obtained from our previous study [1]. However, we have updated the bounding box format, corrected human annotation mistakes, and, more importantly, adopted a cloud service (Roboflow) that enables easy sharing and collaborative annotation.

Three classes are newly annotated in this dataset: blueberry, cherry, and kiwi, which we annotated with high precision. The wheat dataset is obtained from Kaggle's Global Wheat Detection competition.

Size of each dataset

| Dataset | Description | File name | Size (GB) |
|---|---|---|---|
| deepNIR fruit detection | 11 fruits/crops | deepNIR-11fruits-yolov5.zip | 0.72 |

Number of data samples

Folder structure

Once downloaded, the compressed file deepNIR-11fruits-yolov5.zip contains the following folders.

    .
    └── yolov5
        ├── apple
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── avocado
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── blueberry
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── capsicum
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── cherry
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── kiwi
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── mango
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── orange
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── rockmelon
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        ├── strawberry
        │   ├── data.yaml
        │   ├── README.roboflow.txt
        │   ├── test
        │   ├── train
        │   └── valid
        └── wheat
            ├── data.yaml
            ├── README.roboflow.txt
            ├── test
            ├── train
            └── valid

    45 directories, 22 files
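After extraction, the layout listed above can be checked with a short script. The following is a minimal sketch, not part of the dataset tooling: the function name `find_missing` is hypothetical, and the folder and file names are copied from the listing above.

```python
from pathlib import Path

# Names copied from the folder listing above.
CROPS = ["apple", "avocado", "blueberry", "capsicum", "cherry", "kiwi",
         "mango", "orange", "rockmelon", "strawberry", "wheat"]
EXPECTED_FILES = ["data.yaml", "README.roboflow.txt"]
EXPECTED_DIRS = ["test", "train", "valid"]


def find_missing(root):
    """Return a sorted list of expected paths that are absent under root/yolov5.

    `root` is the directory the zip file was extracted into (an assumption:
    the archive unpacks to a top-level `yolov5` folder, as in the listing).
    """
    base = Path(root) / "yolov5"
    missing = []
    for crop in CROPS:
        for name in EXPECTED_FILES:
            if not (base / crop / name).is_file():
                missing.append(f"{crop}/{name}")
        for name in EXPECTED_DIRS:
            if not (base / crop / name).is_dir():
                missing.append(f"{crop}/{name}")
    return sorted(missing)

# Usage: find_missing(".") returns an empty list when the layout is complete.
```

An empty result means all 11 subfolders contain the two files and three folders shown in the listing.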

Each subfolder (e.g., wheat) contains two files and three folders.

- train, valid, test: folders containing images and the corresponding labels (see the Bounding box format section for more detail).
- README.roboflow.txt: an auxiliary file describing how and when the dataset was generated.
- data.yaml: a Yolov5 dataset configuration file with which you can directly train your model. By default, we assume the dataset is stored at /home/USERNAME/dataset; please update this path before model training.
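Updating the default path in data.yaml can be scripted. The sketch below is one possible approach under stated assumptions: it does a plain text substitution of the default root /home/USERNAME/dataset and makes no assumption about which keys the file contains. The function name `repoint_data_yaml` and the example target path are hypothetical.

```python
from pathlib import Path

# Default dataset location stated in the description of data.yaml.
DEFAULT_ROOT = "/home/USERNAME/dataset"


def repoint_data_yaml(yaml_path, new_root, old_root=DEFAULT_ROOT):
    """Replace every occurrence of old_root with new_root in the file.

    Returns the number of occurrences replaced. The file is only rewritten
    when at least one occurrence is found.
    """
    path = Path(yaml_path)
    text = path.read_text(encoding="utf-8")
    count = text.count(old_root)
    if count:
        path.write_text(text.replace(old_root, new_root), encoding="utf-8")
    return count

# Usage (hypothetical target path):
# repoint_data_yaml("yolov5/apple/data.yaml", "/data/deepNIR")
```

A return value of 0 suggests the file does not use the default root, in which case the paths should be inspected by hand.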

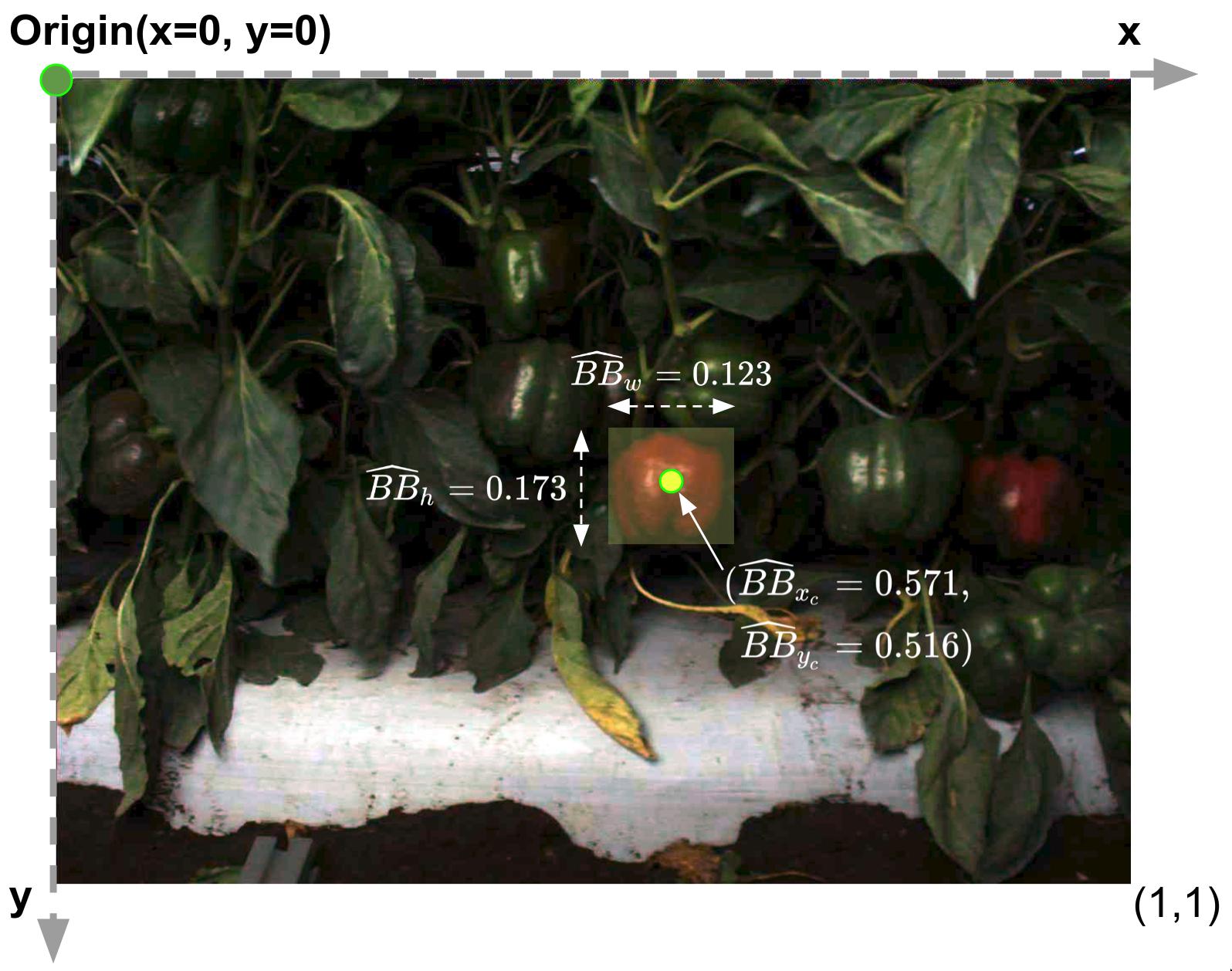

Bounding box format

We follow Yolov5's bounding box format. Each image in the images folder has a corresponding txt file in the labels folder. One such txt file is shown below as an example.

    0 0.435546875 0.24583333333333332 0.11328125 0.17083333333333334
    0 0.613671875 0.37916666666666665 0.12578125 0.12916666666666668
    0 0.456640625 0.39479166666666665 0.13359375 0.16041666666666668
    0 0.53046875 0.4140625 0.059375 0.140625
    0 0.069921875 0.49114583333333334 0.11640625 0.19895833333333332
    0 0.77578125 0.4973958333333333 0.140625 0.178125
    0 0.571484375 0.515625 0.12265625 0.17291666666666666
    0 0.9109375 0.5291666666666667 0.1234375 0.15833333333333333
    0 0.11796875 0.06302083333333333 0.128125 0.12604166666666666
    0 0.941015625 0.675 0.11796875 0.17916666666666667
    0 0.881640625 0.7276041666666667 0.12109375 0.159375
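Each line of a label file holds five whitespace-separated fields: class_id followed by the normalised centre x, centre y, width, and height of one box. A minimal parsing sketch is given below; the names `YoloBox` and `parse_yolo_labels` are hypothetical and not part of Yolov5 or Roboflow.

```python
from typing import List, NamedTuple


class YoloBox(NamedTuple):
    class_id: int
    x_c: float  # normalised centre x
    y_c: float  # normalised centre y
    w: float    # normalised width
    h: float    # normalised height


def parse_yolo_labels(text: str) -> List[YoloBox]:
    """Parse the contents of one label txt file into a list of boxes."""
    boxes = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if len(fields) != 5:
            raise ValueError(f"line {line_no}: expected 5 fields, got {len(fields)}")
        class_id = int(fields[0])
        x_c, y_c, w, h = (float(v) for v in fields[1:])
        boxes.append(YoloBox(class_id, x_c, y_c, w, h))
    return boxes


# Two lines taken from the example label file above.
sample = """\
0 0.435546875 0.24583333333333332 0.11328125 0.17083333333333334
0 0.571484375 0.515625 0.12265625 0.17291666666666666
"""
boxes = parse_yolo_labels(sample)
print(len(boxes), boxes[1].x_c)  # 2 0.571484375
```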

Important

The coordinates are normalised to [0, 1], meaning that the x and y coordinates are divided by the image width and height, respectively. Using normalised bounding boxes may be advantageous in model training, especially for bounding box regression convergence and training time.

The figure above illustrates one bounding box, highlighted in yellow, from the label txt file above. The line 0 0.571484375 0.515625 0.12265625 0.17291666666666666 means that class_id is 0 and the centre of the bounding box is (\(\widehat{BB}_{x_c}\) = 0.571, \(\widehat{BB}_{y_c}\) = 0.516). Similarly, \(\widehat{BB}_{w}\) = 0.123 and \(\widehat{BB}_{h}\) = 0.173 indicate the normalised width and height of the bounding box.

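These values are computed as follows, given the original image size of \(IMG_{w}\) = 1280, \(IMG_{h}\) = 960 in pixels and assuming a manual annotation of \(BB_{x_c}\) = 731, \(BB_{y_c}\) = 495, \(BB_{w}\) = 157, and \(BB_{h}\) = 166 in an ordinary pixel coordinate system:

\(\widehat{BB}_{x_c} = BB_{x_c} / IMG_{w} = 731 / 1280 \approx 0.571\)

\(\widehat{BB}_{y_c} = BB_{y_c} / IMG_{h} = 495 / 960 \approx 0.516\)

\(\widehat{BB}_{w} = BB_{w} / IMG_{w} = 157 / 1280 \approx 0.123\)

\(\widehat{BB}_{h} = BB_{h} / IMG_{h} = 166 / 960 \approx 0.173\)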
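The normalisation described here (x values divided by the image width, y values by the image height) can be sketched in Python. The function names `to_yolo` and `to_pixels` are hypothetical; the numbers are the image size and manual annotation quoted above.

```python
def to_yolo(x_c_px, y_c_px, w_px, h_px, img_w, img_h):
    """Pixel centre/size -> normalised (x_c, y_c, w, h)."""
    return (x_c_px / img_w, y_c_px / img_h, w_px / img_w, h_px / img_h)


def to_pixels(x_c, y_c, w, h, img_w, img_h):
    """Normalised (x_c, y_c, w, h) -> pixel centre/size (inverse of to_yolo)."""
    return (x_c * img_w, y_c * img_h, w * img_w, h * img_h)


# Image size and manual annotation from the text above.
box = to_yolo(731, 495, 157, 166, img_w=1280, img_h=960)
print([round(v, 3) for v in box])  # [0.571, 0.516, 0.123, 0.173]
```

Multiplying the normalised values back by the image size, as in `to_pixels`, recovers the pixel annotation up to floating-point rounding.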

Tip

Using Roboflow, this Yolov5 format can be exported to various other formats, such as COCO or VOC, which use [x1, y1, w, h] or [x1, y1, x2, y2]. See the Download section for more detail.
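For a quick check without Roboflow, the box arithmetic behind these two layouts can also be done by hand. The sketch below only converts a single normalised box into pixel [x1, y1, w, h] and [x1, y1, x2, y2] lists, where (x1, y1) is assumed to be the top-left corner; it does not write COCO JSON or VOC XML files, and the function names are hypothetical.

```python
def yolo_to_xywh(x_c, y_c, w, h, img_w, img_h):
    """Normalised centre format -> pixel [x1, y1, w, h] (top-left corner plus size)."""
    box_w, box_h = w * img_w, h * img_h
    x1 = x_c * img_w - box_w / 2
    y1 = y_c * img_h - box_h / 2
    return [x1, y1, box_w, box_h]


def yolo_to_xyxy(x_c, y_c, w, h, img_w, img_h):
    """Normalised centre format -> pixel [x1, y1, x2, y2] (two opposite corners)."""
    x1, y1, box_w, box_h = yolo_to_xywh(x_c, y_c, w, h, img_w, img_h)
    return [x1, y1, x1 + box_w, y1 + box_h]


# The highlighted label line from the Bounding box format section, 1280 x 960 image.
label = (0.571484375, 0.515625, 0.12265625, 0.17291666666666666)
print([round(v, 1) for v in yolo_to_xywh(*label, 1280, 960)])  # [653.0, 412.0, 157.0, 166.0]
print([round(v, 1) for v in yolo_to_xyxy(*label, 1280, 960)])  # [653.0, 412.0, 810.0, 578.0]
```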